This past week, I attended the AUTOTESTCON 2019 conference, the premier Defense Automated Test Equipment show, whose theme was “increased mission effectiveness through advanced test and support technology”. As you may have seen, I was honored with the “Walter E. Peterson Best Paper on New Technology” award for Mitigating JTAG as an Attack Surface (note: it might take a little while for the paper to be posted on IEEE Xplore, so you might have to check back later).

AUTOTESTCON is a great learning venue and a successful, growing conference. On the topic of JTAG, there was also a fascinating cybersecurity paper and presentation by Al Crouch of Amida that I’d like to cover here.

Al’s paper has the long-ish title of A Role for Embedded Instrumentation in Real-Time Hardware Assurance and Online Monitoring against Cybersecurity Threats (again, you might have to wait a while for the paper to be posted on IEEE Xplore). Co-authored with our chief technology officer, Adam Ley, the paper describes research on using JTAG-based embedded instrumentation to detect and protect against malware at the silicon level. Let that sink in for a minute. The chips in electronic systems are often considered to be the “final frontier” for cyberattacks. Put another way, we’ve assumed for a long time that semiconductors sit at the hardware root of trust, immutable and completely resistant to attacks. But what happens if the chips themselves have been compromised? What if a weakness in the supply chain has allowed a Trojan to be inserted there? Let’s look at the risks and mitigations.

First, it’s important to explain what kinds of attacks can be mounted at the silicon level. A Trojan payload within a chip can belong to any or all of three broad categories:

- Data Leaker

- Behavior Modifier

- Reliability Impacter

Detecting and protecting against these within semiconductors is a significant challenge. Some real and postulated Trojans can use parametric effects, such as variations in temperature or power consumption, to achieve their nefarious goals. It is generally agreed that mitigations against these attacks also need to be implemented at the silicon level. In other words, embedded Trojan-detection instruments can “fight fire with fire” in this instance. These instruments themselves fall into five main categories, in that they:

- Detect activity at circuit elements that have been designated as unused

- Enforce time bounds on the validity of time-framed operations

- Check for unexpected side-channel effects

- Guard against back-door operations

- Raise hardware assertions or alerts in response to deviations from normal behavioral limits
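To make the time-bounds category a little more concrete, here is a minimal software sketch of the idea. It is only an illustration under stated assumptions: the `TimeBound` type, the `check_operation` function and the cycle counts are all hypothetical, and a real instrument would be implemented in RTL on the chip rather than in Python.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TimeBound:
    """Hypothetical allowed duration window for one operation, in clock cycles."""
    min_cycles: int
    max_cycles: int


def check_operation(start_cycle: int, end_cycle: int, bound: TimeBound) -> Optional[str]:
    """Return None if the operation finished inside its window, else an alert string."""
    elapsed = end_cycle - start_cycle
    if elapsed < bound.min_cycles:
        return f"alert: finished early ({elapsed} < {bound.min_cycles} cycles)"
    if elapsed > bound.max_cycles:
        return f"alert: overran ({elapsed} > {bound.max_cycles} cycles)"
    return None


# Assumed window for an illustrative operation: 90 to 110 cycles.
bound = TimeBound(min_cycles=90, max_cycles=110)
normal_result = check_operation(1000, 1100, bound)   # 100 cycles, inside the window
overrun_result = check_operation(1000, 1150, bound)  # 150 cycles, outside the window
```

An operation that takes noticeably longer (or shorter) than its designed window is one possible sign that extra logic is active, which is why the check is applied in both directions.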

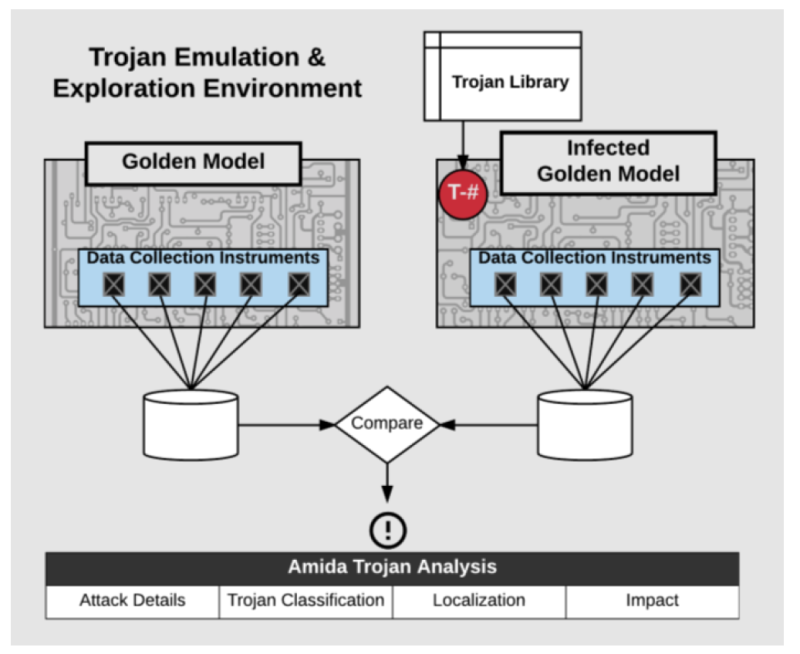

Amida and ASSET are working together to develop technology to detect and mitigate such attacks. The setup consists of the Amida Trojan Emulation and Exploration Environment (TE3©) with a Sandbox FPGA, Data Collection RTL instruments, a variety of hardware Trojans, Trojan Insertion and Data Collection software (ASSET’s ScanWorks®) and Amida’s supervised machine learning tool. The configuration is shown below:

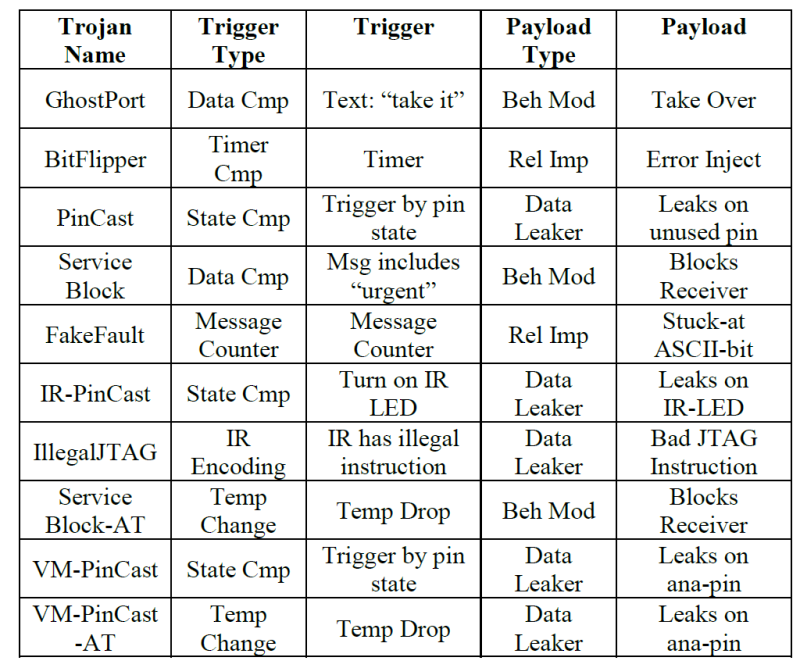

The Trojans that were evaluated in an initial trial of the technology, as well as their trigger and payload characteristics, are as below:

The library of Trojan-detection data collection instruments was placed within an IJTAG (IEEE 1687) network in the FPGA. IJTAG segment insertion bits (SIBs) were used to open and close individual scan chains providing access to different instruments, whilst managing power consumption, operational noise and data collection latency. JTAG and IJTAG were used since they operate out-of-band, on bare metal, totally independent of the operating system and mission logic of any hardware platform.
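As a rough illustration of why opening and closing SIBs helps with latency, here is a toy model of a flat SIB network. It assumes each SIB contributes one bit to the scan path and, when open, adds the data register of the instrument behind it. The instrument names and register widths are made up for the example, and the model ignores the extra scan cycle needed to actually open or close a SIB.

```python
from dataclasses import dataclass


@dataclass
class Sib:
    """One segment insertion bit gating a hypothetical instrument register."""
    name: str
    instrument_bits: int  # assumed width of the instrument's data register
    is_open: bool = False


def scan_path_length(network):
    """Bits shifted per scan: one per SIB, plus the instrument register if the SIB is open."""
    return sum(1 + (sib.instrument_bits if sib.is_open else 0) for sib in network)


def select(network, names):
    """Open only the named SIBs and close all the others."""
    for sib in network:
        sib.is_open = sib.name in names


# Hypothetical instruments, loosely named after the categories listed earlier.
network = [
    Sib("unused_logic_mon", 16),
    Sib("time_bound_mon", 32),
    Sib("side_channel_mon", 64),
]

closed_len = scan_path_length(network)        # every SIB closed
select(network, {"side_channel_mon"})
one_open_len = scan_path_length(network)      # only one instrument in the path
select(network, {sib.name for sib in network})
all_open_len = scan_path_length(network)      # every instrument in the path
```

With these assumed widths, reading a single instrument shifts 67 bits rather than the 115 needed when every segment is open, which is the kind of saving that matters when data is collected repeatedly.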

A huge amount of TE3 data has been collected to build up our models, and more is being collected over time. In parallel, more research is underway to detect “unknown” Trojan types (typically through parametric effects) as well as known Trojans. This entails adding more instrumentation to cover the five main categories listed above. To learn more about the specifics, you’ll have to read Al and Adam’s paper. One fascinating example in the “Instrumentation to check for unexpected side-channel effects” category was the chirpMon instrument, which learns to predict the behavior of a keep-alive waveform and its minor variations over thousands of repeats in a learning mode, and then identifies any outlying behavior due to a Trojan in the prediction/operation mode.
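The learn-then-detect pattern described for chirpMon can be sketched with a simple statistical stand-in. To be clear, this is not the chirpMon algorithm from the paper: it assumes a per-sample mean and standard deviation model with a fixed threshold, and the waveform, jitter and function names are all invented for illustration.

```python
import statistics

# Hypothetical keep-alive waveform shape (arbitrary units).
BASE = [0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0, 0.0]


def keep_alive(repeat):
    """Synthetic waveform with small deterministic variations between repeats."""
    return [v + ((repeat * 7 + j * 3) % 5 - 2) * 0.01 for j, v in enumerate(BASE)]


def learn(waveforms):
    """Learning mode: per-sample mean and standard deviation over many repeats."""
    columns = list(zip(*waveforms))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]
    return means, stds


def is_outlier(waveform, model, k=4.0, floor=1e-6):
    """Operation mode: flag a waveform if any sample is more than k sigma from the mean."""
    means, stds = model
    return any(abs(x - m) > k * max(s, floor) for x, m, s in zip(waveform, means, stds))


model = learn([keep_alive(i) for i in range(100)])

clean = keep_alive(101)
tampered = list(clean)
tampered[3] += 0.2  # assumed disturbance standing in for a Trojan's side effect

clean_flag = is_outlier(clean, model)
tampered_flag = is_outlier(tampered, model)
```

Here the clean repeat stays within the learned variation and is not flagged, while the disturbed one is. A real instrument would have to cope with far subtler deviations and noisier data, which is where the machine learning tooling mentioned above comes in.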

Certainly, this is a fascinating area, and one that is expected to gain much more attention over time.