In Part 1, I compared the performance and cost of Intel Cascade Lake versus AMD Rome. In Part 2, I compared these against Amazon’s own Graviton1 CPU. In this article, I benchmark Ampere Computing’s eMAG, available on Packet.com.

Ampere Computing’s eMAG is an Arm64 CPU that competes with the Marvell ThunderX and with Amazon’s own Graviton1 chip. Packet, a bare metal cloud provider, offers the eMAG-based c2.large.arm instance with 32 cores, 128GB RAM, a 480GB SSD, and 2 x 10Gbps network interfaces. It was simply too tempting to compare Ampere against my earlier benchmarks on AMD Rome, Intel Cascade Lake, and Graviton1.

Disclaimer: I don’t claim these benchmarks to be comprehensive or accurate in any way. If you want rigorous journalism and scientific benchmarks, read some of Michael Larabel’s Packet-based benchmarking on phoronix.com. This is partly a learning exercise for me, and the results should be taken as such. Most importantly, the two vendors (AWS and Packet) have distinctly different hardware configurations for each server type: Packet offers “bare metal”, while AWS is, in some cases, more VM-based, as you’ll see below.

Now that the disclaimer is out of the way, let’s get to the specifics. Setting up an account and running a server on Packet is surprisingly easy, much like getting started with AWS. Within about 15 minutes, I had the Ampere machine up and running:

One thing I have to say about Packet: I really enjoyed using the service. It’s simple to set up and use, and the fact that it runs on “bare metal” is an attraction. I found this article well worth reading: www.packet.com/blog/why-we-cant-wait-for-aws-to-announce-its-bare-metal-product/.

Note that Packet provisions your Linux instance and logs you in as root, which turned out to be a problem: bitbake won’t build as root. To get around this, I created a non-root user and switched to it with the Linux “su -” command.

As with Graviton1, you first need to install the package prerequisites, which differ slightly from x86. On x86 I used “sudo apt-get install gcc-multilib” to get the compiler, and on Graviton1 I had to run “sudo apt-get install gcc-7-multilib-arm-linux-gnueabi”, but on Ampere I didn’t have to do anything: the compiler came pre-installed with Ubuntu 16.04 LTS.

As with Graviton1, I had to add:

INHERIT_remove = "uninative"

to the OpenBMC conf/local.conf file so that bitbake would run without the uninative error.

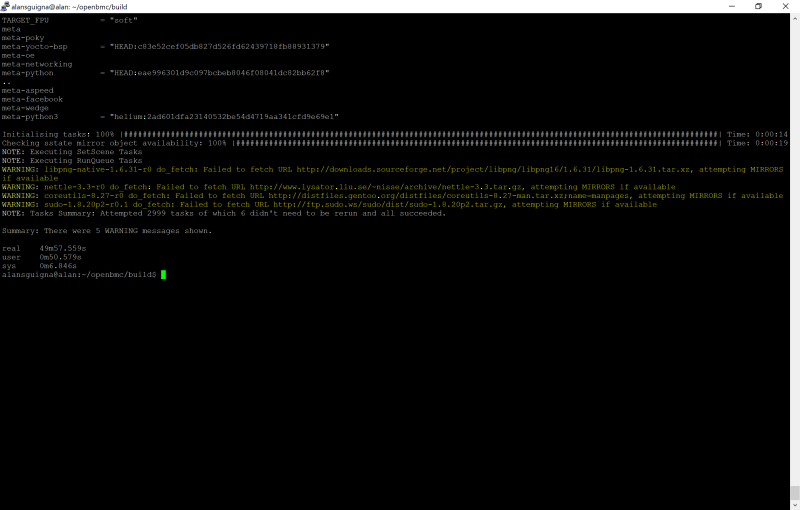
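If you rebuild often, or on several machines, it can help to script the local.conf change rather than edit the file by hand. The Python sketch below is a hypothetical helper (the name disable_uninative is mine, not part of OpenBMC or BitBake). It appends the line only if it isn’t already present and writes plain ASCII double quotes, which BitBake requires. It assumes the older override syntax used in this build; Yocto releases from Honister (3.4) onward spell it INHERIT:remove.

```python
from pathlib import Path

# The override used in this article (pre-Honister Yocto syntax).
# Plain ASCII double quotes are required; curly quotes will not parse.
UNINATIVE_LINE = 'INHERIT_remove = "uninative"'


def disable_uninative(local_conf: Path) -> bool:
    """Append UNINATIVE_LINE to local_conf unless it is already present.

    Hypothetical helper, not part of OpenBMC or BitBake.
    Returns True if the file was changed, False if the line was already there.
    """
    text = local_conf.read_text()
    if UNINATIVE_LINE in (line.strip() for line in text.splitlines()):
        return False
    # Make sure the new setting starts on its own line.
    if text and not text.endswith("\n"):
        text += "\n"
    local_conf.write_text(text + UNINATIVE_LINE + "\n")
    return True
```

Run from the OpenBMC build directory, disable_uninative(Path("conf/local.conf")) returns True the first time and False on later calls, so it is safe to leave in a setup script.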

Then, the build succeeded:

I was impressed by how easy it was to configure and run the Yocto OpenBMC build, given the complexity of the Yocto Project and its build process. There wasn’t much difference between Graviton1 and eMAG in this respect, a testament to how far Arm64 ecosystem support has come.

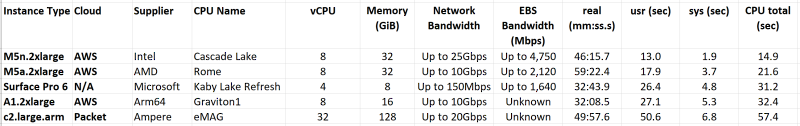

Without further ado, here is the summary table:

Again, take some of these results with a grain of salt; a few of the outcomes are clearly anomalous.

Even though the results aren’t particularly scientific, they are still interesting. Some observations:

Intel Cascade Lake used the least CPU time, yet it was the third-slowest in real (wall-clock) time. Very strange.

Intel Cascade Lake also had the lowest sys time (time spent in kernel space).

AWS’s Graviton1 was faster than the Ampere eMAG, even though the eMAG has a more powerful hardware configuration (more vCPUs, memory, and network bandwidth).
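One way to reason about the Cascade Lake result is to compare CPU time with wall-clock time. Total CPU time (user + sys) divided by real time is roughly the average number of cores kept busy during the build, so a machine can use little CPU time and still finish late if its cores spend much of the run idle, for example waiting on I/O, source downloads, or serial build steps. That is one possible explanation, not a diagnosis. The Python sketch below assumes the timings came from bash’s time keyword with its default output format; the function names are hypothetical.

```python
import re

# One line of bash's default `time` output, e.g. "real\t62m3.123s".
_TIME_LINE = re.compile(r"^(real|user|sys)\s+(?:(\d+)m)?([\d.]+)s$")


def parse_time_output(text: str) -> dict[str, float]:
    """Return the real/user/sys values from bash `time` output, in seconds."""
    seconds: dict[str, float] = {}
    for line in text.splitlines():
        match = _TIME_LINE.match(line.strip())
        if match:
            kind, minutes, secs = match.groups()
            seconds[kind] = int(minutes or 0) * 60 + float(secs)
    return seconds


def average_parallelism(t: dict[str, float]) -> float:
    """CPU time (user + sys) divided by wall-clock (real) time.

    Roughly the average number of cores kept busy over the whole run;
    a low value means cores often sat idle.
    """
    return (t["user"] + t["sys"]) / t["real"]
```

Feeding each machine’s real/user/sys lines through average_parallelism would show whether the Cascade Lake run left more of its cores idle than the others.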

I still need to research the cost comparison. I believe AWS and Packet have fundamentally different pricing models: Amazon charges for CPU time plus storage, network bandwidth, and so on, while Packet charges only for real time (i.e., all the time the server is running, whether or not it is executing a workload). With Packet, I think you actually have to delete the server to stop the credit card charges. So with the information I have, comparing the two would be apples versus oranges.
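To make the real-time billing model concrete: if every hour the server exists is billed, the cost of a benchmark run is the hourly rate times the build time plus any idle time before the server is deleted. The Python sketch below is a minimal illustration under that assumption. The function names are hypothetical, the hourly rate is a placeholder you would take from the provider’s current price list, and nothing here models AWS pricing.

```python
def wall_clock_cost(hourly_rate: float, build_hours: float, idle_hours: float) -> float:
    """Cost when every hour the server exists is billed, busy or idle.

    hourly_rate is a placeholder, not a quoted Packet price.
    """
    return hourly_rate * (build_hours + idle_hours)


def idle_fraction(build_hours: float, idle_hours: float) -> float:
    """Share of the bill spent on hours when no workload was running."""
    return idle_hours / (build_hours + idle_hours)
```

For example, leaving the server up idle for as long as the build itself took doubles the bill for that run, which is why deleting the server promptly matters under this model.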

The fun part is that Ampere is about to launch a new CPU: an 80-core beast built on a 7nm process node. Will it take the crown as the fastest for my workload? It seems possible. Time will tell.