As you know, I’ve done a lot of OpenBMC builds on my AMD Ryzen desktop PC, as well as my Surface Pro 6. I decided to tackle the same builds on Amazon Web Services (AWS), on virtual machines based on the Intel Cascade Lake CPU, as well as the AMD EPYC 7000. Which one is faster and cheaper?

I’ve always been fascinated by CPU performance benchmarking. Vendor X claims superiority over Vendor Y, based upon a pre-determined set of criteria. Vendor Y then rigs the criteria to show that they’re better than Vendor X. There are so many variables regarding what is being evaluated: raw compute horsepower (IPC, number of threads, or something else), power consumption, price, etc., as well as the intrinsic nature of the workload being undertaken. Some platforms are great for multi-threaded workloads but weak on raw horsepower; others are tuned for bursty or memory-intensive benchmarks; and so on. It’s difficult, if not impossible, to create objective criteria to evaluate one CPU against another and say which one is “better”.

But that doesn’t mean that I can’t take advantage of what’s out there, to conduct experiments of my own. Thanks to AWS, I don’t need to own a high-powered Intel Cascade Lake server; I can just lease some cycles from them (actually, I do have easy access to these types of servers, but that’s a story for another day). And I won’t claim that these experiments are biased or unbiased in any way; they are just the results of one person’s attempt to be objective, and to learn something new.

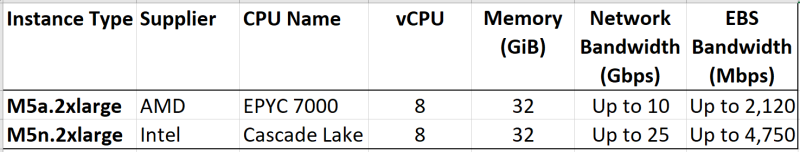

AWS lets you create VMs, which it calls “Instances”; these can be x86 (Intel or AMD) or Arm64 (Graviton, AWS’s own home-grown 64-bit Arm server CPU). You get to choose the configuration of these Instances, so I picked a couple of Instances suitable for General Purpose computing:

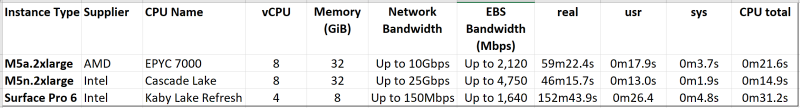

You can see that it’s not an apples-to-apples comparison. Although I could match the number of vCPUs (essentially the number of threads) and the amount of RAM, the two Instances are not evenly matched in network bandwidth or EBS (Elastic Block Store) bandwidth. But I decided to proceed anyway.

Now, setting up and building a Yocto OpenBMC image on AWS is a bit tricky. On my home desktop and work notebook, I’ve already configured the build environment, so I can fire up bitbake pretty quickly. With AWS, it takes a while to get things set up the way I need them. Here are some tips:

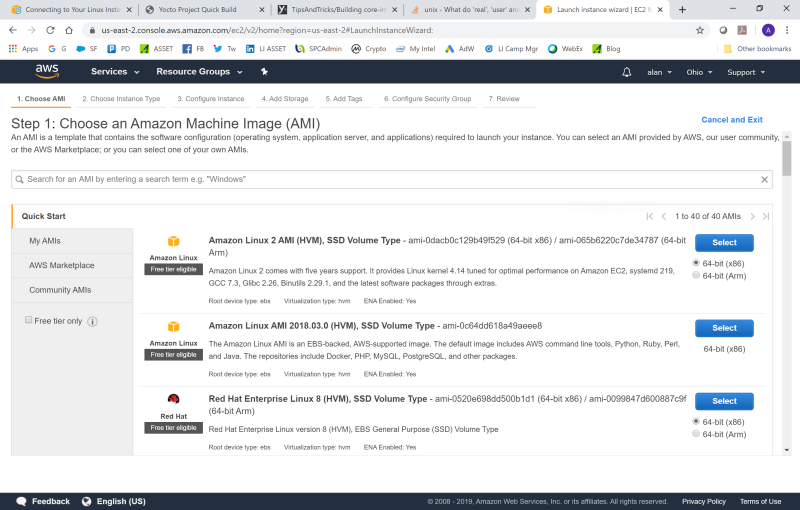

Configuring and launching the Instance is pretty easy. Once you have set up your AWS account, sign in to the Console and click Launch Instance:

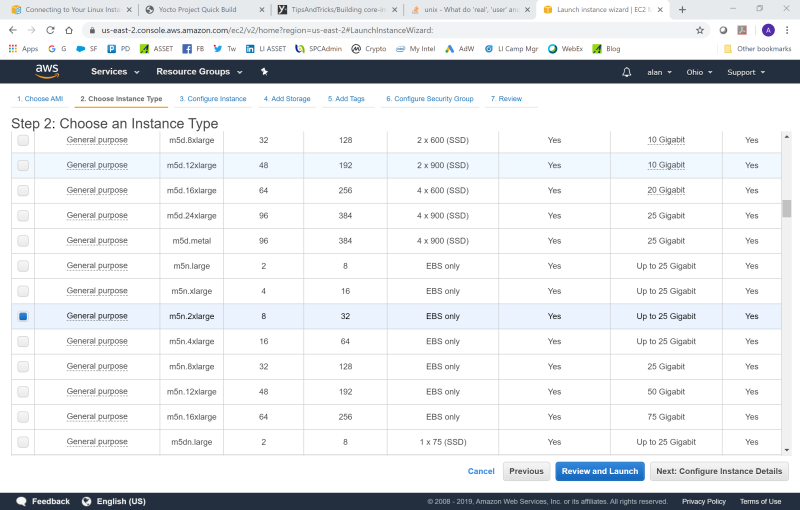

You’re asked to choose a Windows or Linux image (an Amazon Machine Image, or AMI), and whether you want 64-bit (x86) or 64-bit (Arm). In this case, I picked Ubuntu Server 16.04 LTS, 64-bit (x86). Next, you choose an Instance Type. I chose the m5n.2xlarge first, to exercise the Cascade Lake build:

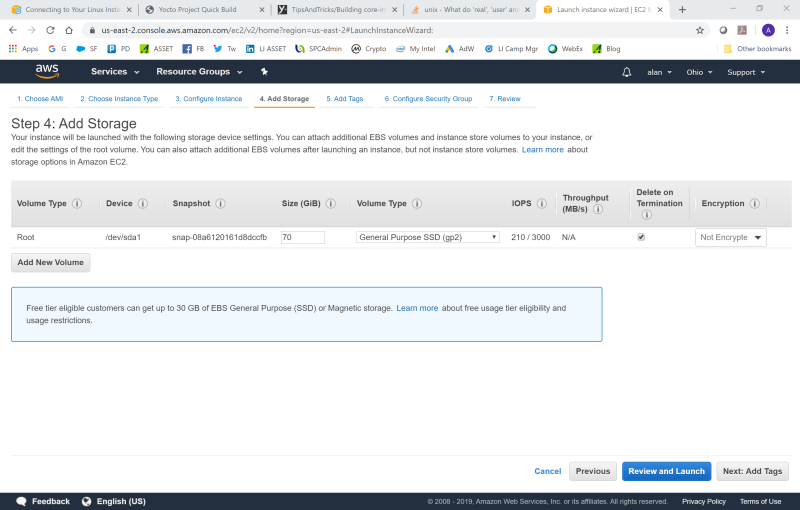

The next item for attention is the amount of storage needed. By default you get 8GiB of SSD storage. This is not enough to do an OpenBMC Yocto build. I bumped it up to 70GiB to be safe:
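Since running out of disk partway through a long build is painful, it can be worth checking free space before starting. Here’s a minimal sketch of a hypothetical helper of my own (`check_free_space` is not part of OpenBMC, Yocto, or AWS). It relies on the POSIX `df -P -k` output format, and the threshold you pass in is your own estimate of what the build needs, not a published requirement.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of OpenBMC/Yocto/AWS):
# return 0 if DIR's filesystem has at least MIN_GIB GiB available, else 1.
check_free_space() {
  local dir=$1 min_gib=$2 avail_gib
  # POSIX "df -P -k": line 2, column 4 is available space in 1024-byte blocks.
  # Divide by 1024*1024 and round down to whole GiB.
  avail_gib=$(df -P -k "$dir" | awk 'NR == 2 { print int($4 / 1048576) }')
  if [ "$avail_gib" -ge "$min_gib" ]; then
    echo "OK: ${avail_gib} GiB available under $dir"
  else
    echo "Only ${avail_gib} GiB available under $dir (wanted ${min_gib})" >&2
    return 1
  fi
}
```

For example, `check_free_space "$HOME" 50` right after logging in; the 50 GiB there is only a placeholder threshold.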

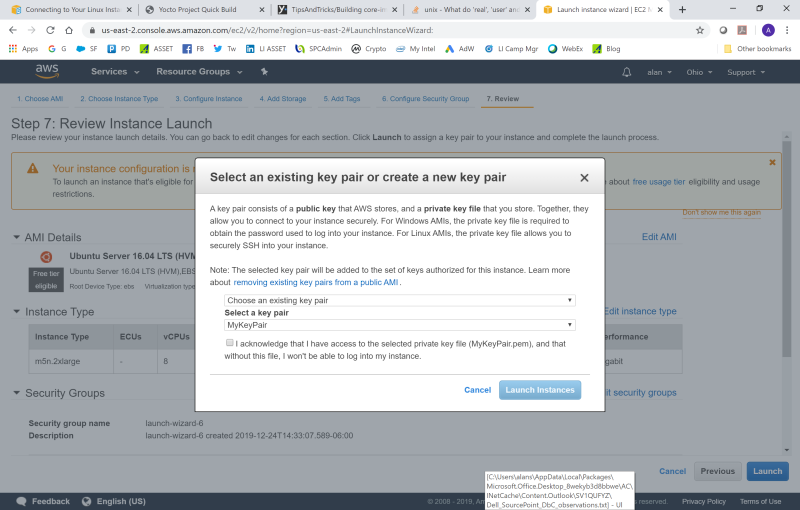

Then you can go ahead and click the Review and Launch button. First, though, for security you need a public/private key pair so that you can SSH into your instance. AWS will tell you this:

If you’re just starting out, the instructions for creating a key pair and connecting are in the User Guide for Linux Instances. If you’ve done everything right, you should now be in the Ubuntu shell!

One thing I learned after some experimentation is that, before installing the prerequisite packages for the build, you must first run:

```shell
$ sudo apt-get update
$ sudo apt-get upgrade
```

Note that “update” only refreshes the list of available packages and their versions; it does not install or upgrade anything. Once the lists are up to date, the package manager knows which updates are available for the software you have installed, and “upgrade” actually installs the newer versions.

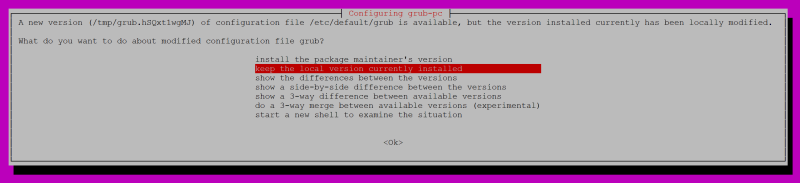

After I did the upgrade, I saw this warning:

After some experimentation, I found that either of the first two options works fine.

When that’s done, you can proceed with installing the build prerequisites via:

```shell
$ sudo apt-get install gawk wget git-core diffstat unzip texinfo gcc-multilib \
    build-essential chrpath socat cpio python python3 python3-pip python3-pexpect \
    xz-utils debianutils iputils-ping python3-git python3-jinja2 libegl1-mesa libsdl1.2-dev \
    pylint3 xterm
```
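After the install finishes, a quick check that the main tools actually landed on the PATH can save a failed bitbake run later. This is a minimal sketch with a hypothetical helper name (`missing_tools`); the list of commands to check is my own assumption about which binaries those packages provide, not an official Yocto checklist.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each named command that is not on PATH,
# one per line; return 1 if any are missing, 0 if all are present.
missing_tools() {
  local tool status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      status=1
    fi
  done
  return "$status"
}
```

Running `missing_tools gawk wget git diffstat unzip makeinfo chrpath socat cpio python3 xz` should print nothing if the install worked. Command names don’t always match package names: git-core provides `git`, texinfo provides `makeinfo`, and xz-utils provides `xz`.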

Then you can go ahead and clone the Facebook OpenBMC repository, run the initialization script, and start the build with the following commands (for more details, see the README.md at https://github.com/facebook/openbmc):

```shell
$ git clone -b helium https://github.com/facebook/openbmc.git
$ cd openbmc
$ ./sync_yocto.sh
$ source openbmc-init-build-env meta-facebook/meta-wedge
$ time bitbake wedge-image
```
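Since I ran the same build on several machines, it helps to record each run’s timings in one place. Here’s a sketch of a hypothetical `timed_run` wrapper (my own name, not part of bitbake or OpenBMC) that uses bash’s `time` keyword with a custom `TIMEFORMAT` and appends one CSV line per run. It is bash-specific, and the CSV layout is just an assumption that’s easy to paste into a spreadsheet.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of bitbake or OpenBMC): run a command under
# bash's `time` keyword and append "label,real,user,sys" (seconds) to a CSV.
# The command's own stdout and stderr still go to the terminal.
timed_run() {
  local label=$1 csv=$2
  shift 2
  # Bash-specific: %3R, %3U, %3S are real, user, sys with three decimals.
  local TIMEFORMAT='%3R,%3U,%3S'
  local tmp status
  tmp=$(mktemp)
  # fd 4 keeps the original stderr for the command itself, so only the
  # timing report (written to the group's stderr by `time`) lands in $tmp.
  { time "$@" 2>&4 4>&-; } 4>&2 2>"$tmp"
  status=$?
  printf '%s,%s\n' "$label" "$(cat "$tmp")" >> "$csv"
  rm -f "$tmp"
  return "$status"
}
```

With the function defined in the same shell where you sourced the build environment, you could run `timed_run m5n.2xlarge builds.csv bitbake wedge-image`. The function returns the command’s exit status, so a failed build still shows up as a failure.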

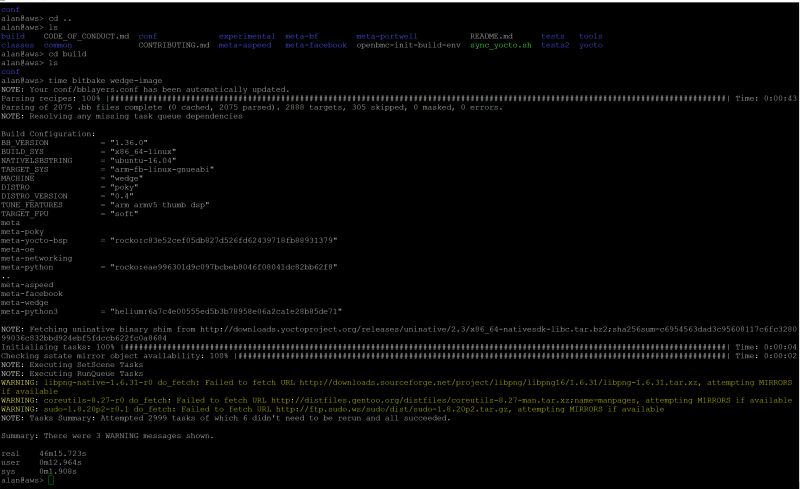

And voila, it works:

Note that I used the “time” command (in bash, a shell keyword rather than the standalone /usr/bin/time program) to capture different aspects of how long the build took:

“real” is wall-clock time: the time from the start of the call to its finish. This is all elapsed time, including time slices used by other processes and time the process spends blocked (for example, waiting for I/O to complete).

“user” is the amount of CPU time spent in user-mode code (outside the kernel) within the process. This is only the CPU time actually used in executing the process; other processes, and time the process spends blocked, do not count toward this figure.

“sys” is the amount of CPU time spent in the kernel on behalf of the process, that is, time spent executing system calls, as opposed to library code, which still runs in user space. Like “user”, this is only CPU time used by the process.

So “user” + “sys” tells you how much actual CPU time was used. For a parallel build, that CPU time accumulates on several vCPUs at once, so “user” + “sys” can be larger than “real”.
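To turn the “real”, “user” and “sys” lines into numbers, here’s a small sketch. It assumes bash’s default `time` output, which prints minutes and seconds (e.g. `61m2.500s`). `to_seconds` and `summarize_time` are hypothetical helper names, and the example values further down are made up, not my measurements.

```shell
#!/usr/bin/env bash
# Convert bash's default `time` format ("<minutes>m<seconds>s", e.g.
# "61m2.500s") into plain seconds with three decimals.
to_seconds() {
  printf '%s\n' "$1" | awk -F'm' '{ sub(/s$/, "", $2); printf "%.3f\n", $1 * 60 + $2 }'
}

# Given real, user and sys in that format, print total CPU time (user + sys)
# and the average number of busy CPUs, (user + sys) / real.
summarize_time() {
  local real user sys
  real=$(to_seconds "$1")
  user=$(to_seconds "$2")
  sys=$(to_seconds "$3")
  awk -v r="$real" -v u="$user" -v s="$sys" 'BEGIN {
    busy = (r + 0 > 0) ? (u + s) / r : 0   # guard against a zero real time
    printf "cpu=%.3fs avg_busy_cpus=%.2f\n", u + s, busy
  }'
}
```

With made-up inputs, `summarize_time 10m0.000s 60m0.000s 5m0.000s` prints `cpu=3900.000s avg_busy_cpus=6.50`. On an 8-vCPU Instance, an average well below 8 could hint that the build spent part of its time waiting (on downloads or disk, for example) rather than computing.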

So next I repeated the process for the AMD EPYC 7000. And, just for comparison’s sake, I fired up and timed a similar build on my Surface Pro 6, running VirtualBox with four threads and 8GB of RAM. Here’s a summary of the results:

Very interesting! Both EPYC and Xeon outperformed the Surface Pro 6, as you would expect. And the Cascade Lake was faster than the AMD EPYC by a significant margin. I’m not sure why. One possible factor is that, with the Instances I chose, Intel has a significant advantage over AMD in network bandwidth and EBS bandwidth, even though the vCPU count and memory are the same.

So, which one is better? It’s impossible to tell from this benchmark.

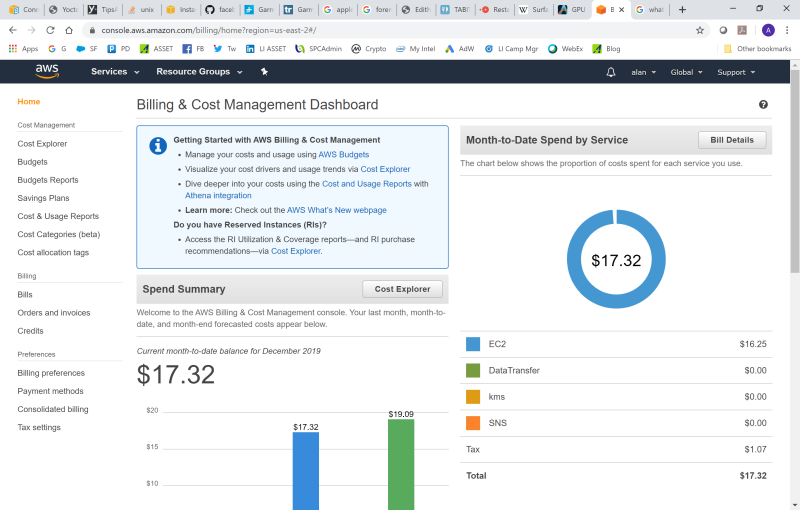

Looking at it another way: for this particular workload (building OpenBMC images), and given the Instances available on AWS, which of the two is the better choice? One way to judge is to multiply the time to run the job by the cost of the job; the smaller the number, the better. Or, if the time to run the builds didn’t matter, you could just compare their cost. I could not easily determine the cost of the jobs, though. I went into the AWS Billing Console and refreshed it before and after the builds completed, and it didn’t budge. Only later in the day did the amount spent update, seemingly at random:

Even when the Instances were in the Stopped state, I was still spending money. That’s most likely the storage: AWS continues to charge for EBS volumes while their instance is stopped.
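To get a feel for what a stopped Instance’s storage costs, here’s a rough sketch. `ebs_cost` is a hypothetical helper; the per-GB-month rate is an input you would look up on the EBS pricing page for your region and volume type, and prorating over a 30-day month is a simplifying assumption (AWS’s actual proration may differ).

```shell
#!/usr/bin/env bash
# Rough EBS storage charge (USD) while an instance sits stopped:
# provisioned GiB * per-GB-month rate, prorated by days over a 30-day month.
# Arguments: volume size in GiB, USD per GB-month, number of days.
ebs_cost() {
  awk -v gib="$1" -v rate="$2" -v days="$3" \
    'BEGIN { printf "%.2f\n", gib * rate * days / 30 }'
}
```

With the 70GiB volume from earlier and a placeholder rate of 0.10 USD per GB-month, `ebs_cost 70 0.10 3` prints `0.70`, roughly 70 cents for three stopped days.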

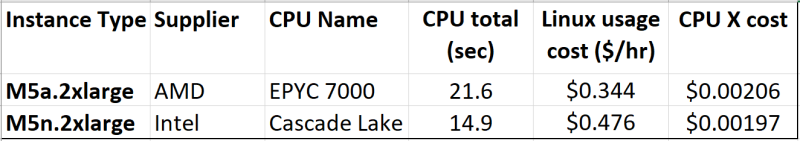

Let’s redo this assessment using the On-Demand prices Amazon publishes at https://aws.amazon.com/ec2/pricing/on-demand/. An updated table is below:

It looks like Intel has the edge in terms of CPU time × cost, but only by a little: the two are within about 4% of each other. And, as mentioned above, if I didn’t particularly care how long the builds took (I rarely do more than one or two a day), I might not mind if one Instance ran ten or twenty minutes faster than the other.
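Here’s a small sketch of the arithmetic behind that kind of comparison: the cost of one build, a time × cost figure of merit, and the percentage gap between two such figures. All three function names are hypothetical. I’ve assumed the job’s cost is wall-clock hours times the hourly On-Demand price, since that is what the Instance is billed for; the time factor in the figure of merit can be wall-clock or CPU hours, whichever you’re comparing.

```shell
#!/usr/bin/env bash
# Cost of one build (USD): wall-clock hours the instance ran * hourly price.
job_cost() {
  awk -v h="$1" -v p="$2" 'BEGIN { printf "%.4f\n", h * p }'
}

# Figure of merit (smaller is better): a build-time figure in hours times
# the job's cost in USD.
build_merit() {
  awk -v t="$1" -v c="$2" 'BEGIN { printf "%.4f\n", t * c }'
}

# Percentage by which the larger of two figures exceeds the smaller one.
pct_gap() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    a += 0; b += 0
    lo = (a < b) ? a : b
    hi = (a < b) ? b : a
    printf "%.1f%%\n", (hi - lo) / lo * 100
  }'
}
```

With made-up inputs (not my measurements or actual AWS prices), `job_cost 2 0.5` prints `1.0000`, `build_merit 2 1.0000` prints `2.0000`, and `pct_gap 1.00 1.04` prints `4.0%`.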

Also note that this benchmark reflects one workload, with its own particular demands on CPU horsepower, number of threads, memory, network downloads, storage reads/writes, etc. A different workload might yield different results.

Finally, this represents the current “state of the nation” in terms of CPUs that are available from Intel and AMD. What happens when AWS supports AMD Milan, Intel Ice Lake, and other future processors? Things will get really interesting then!

So, what’s next? Should I try additional benchmarking to see if I can normalize out the effect of the different number of threads, amount of memory, and network and EBS bandwidth between the two suppliers? And, maybe I should try out the Graviton Instances to see how Arm64 compares with x86? Stay tuned.