Heterogeneous computing refers to systems that use more than one kind of processor, typically a combination of CPUs and GPUs, sometimes on the same silicon die. Is this approach well suited to supercomputing applications?

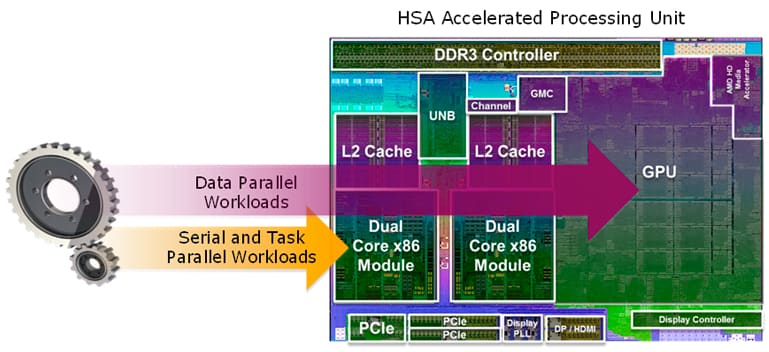

Heterogeneous computing systems are multi-core systems that gain performance not just by adding cores, but also by incorporating specialized processing capabilities to handle particular tasks. Heterogeneous System Architecture (HSA) systems use multiple processor types (typically CPUs and GPUs), sometimes on the same silicon die, to provide the best of both worlds. GPUs, apart from their well-known 3D graphics rendering capabilities, can also perform mathematically intensive computations on very large data sets, while CPUs run the operating system and perform traditional serial tasks. The accompanying diagram (courtesy of AMD) illustrates this arrangement.

Vector processors like those in advanced GPUs can have thousands of individual compute cores, all of which can operate simultaneously. This makes GPUs well suited to workloads that combine very large data sets with intensive numerical computation, which is the profile of many supercomputing applications. GPUs handle such workloads with much lower power consumption than serial processing of similar data sets on CPUs. This is what allows GPUs to drive capabilities such as incredibly realistic, multi-display stereoscopic gaming. And while their value initially came from improving 3D graphics performance by offloading graphics from the CPU, they have become increasingly attractive for more general purposes, such as data-parallel programming tasks.
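To make "data parallel" concrete, here is a minimal sketch in plain Python. It is an illustration only and not GPU code: the names `saxpy_kernel`, `run_serial`, and `run_in_any_order` are hypothetical, and the shuffled loop is just a stand-in for hardware that runs many work-items at once. The point is that each output element depends only on its own inputs, so the order of execution does not matter.

```python
import random

def saxpy_kernel(i, a, x, y):
    """One work-item: computes output element i and touches no other element."""
    return a * x[i] + y[i]

def run_serial(a, x, y):
    """CPU-style execution: one element after another, in index order."""
    out = [0.0] * len(x)
    for i in range(len(x)):
        out[i] = saxpy_kernel(i, a, x, y)
    return out

def run_in_any_order(a, x, y, seed=0):
    """Stand-in for parallel hardware: visits the index space in shuffled order.

    Because the work-items are independent, the result is the same as the
    serial version regardless of the order in which they run.
    """
    order = list(range(len(x)))
    random.Random(seed).shuffle(order)
    out = [0.0] * len(x)
    for i in order:
        out[i] = saxpy_kernel(i, a, x, y)
    return out

x = [float(i) for i in range(8)]
y = [1.0] * 8
print(run_serial(2.0, x, y) == run_in_any_order(2.0, x, y))  # prints True
```

A workload with this shape is what maps well onto thousands of simple cores; a loop where each step needs the previous step's result does not.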

But CPUs and GPUs must play well together. Typically, a program running on the CPU queues work for the GPU using system calls through a device driver stack managed by a completely separate scheduler. This introduces significant dispatch latency, with overhead that makes the process worthwhile only when the application requires a very large amount of parallel computation. Further, if a program running on the GPU wants to directly generate work-items for the CPU, this requires special treatment. All devices within a heterogeneous architecture must share a virtual address space, enabling “zero-copy” operation that eliminates the overhead of moving large blocks of data between devices. And, since software development is such a large part of the investment in HPC applications, the success of heterogeneous computing depends on support for mainstream programming languages and libraries. This in turn provides a transparent path for millions of developers (along with their existing code) to directly benefit from the efficiencies of heterogeneous computing.
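The trade-off between dispatch overhead and parallel speedup can be sketched with a back-of-the-envelope cost model. Everything here is an assumption made for illustration: the function names are hypothetical, the model is a simple linear one, and the numbers in the usage lines are invented rather than measured on any real device. Passing `copy_bytes_per_s=None` models a shared virtual address space where no bulk copy is needed.

```python
def cpu_time(n, cpu_s_per_item):
    """Time to process n items serially on the CPU."""
    return n * cpu_s_per_item

def gpu_time(n, cpu_s_per_item, speedup, dispatch_s, bytes_per_item,
             copy_bytes_per_s=None):
    """Time to offload n items: fixed dispatch cost + optional copy + compute.

    copy_bytes_per_s=None models zero-copy (shared virtual address space).
    """
    copy = 0.0 if copy_bytes_per_s is None else n * bytes_per_item / copy_bytes_per_s
    return dispatch_s + copy + n * cpu_s_per_item / speedup

def break_even_items(cpu_s_per_item, speedup, dispatch_s, bytes_per_item,
                     copy_bytes_per_s=None):
    """Smallest problem size at which offloading starts to pay off.

    Returns None if the per-item copy cost eats the whole per-item saving,
    in which case offloading never wins under this model.
    """
    copy_per_item = 0.0 if copy_bytes_per_s is None else bytes_per_item / copy_bytes_per_s
    saved_per_item = cpu_s_per_item * (1.0 - 1.0 / speedup) - copy_per_item
    if saved_per_item <= 0:
        return None
    return dispatch_s / saved_per_item

# Hypothetical figures: 10 ns of CPU work per item, 20x GPU speedup,
# 20 microseconds of dispatch latency, 8 bytes per item, 8 GB/s copy rate.
params = dict(cpu_s_per_item=1e-8, speedup=20.0, dispatch_s=2e-5, bytes_per_item=8)
with_copy = break_even_items(copy_bytes_per_s=8e9, **params)
zero_copy = break_even_items(copy_bytes_per_s=None, **params)
print(f"break-even with copy: {with_copy:.0f} items")
print(f"break-even zero-copy: {zero_copy:.0f} items")
```

Under this model, lowering dispatch latency or removing the copy both pull the break-even point down, which is why these are the overheads a shared address space and a lighter dispatch path aim to reduce.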

Enter the Heterogeneous System Architecture (HSA) Foundation, a not-for-profit industry standards body focused on making it dramatically easier to program heterogeneous computing devices. Today’s membership in the HSA Foundation consists of 40 technology companies and 17 universities; the founding members are AMD, ARM, Imagination Technologies, MediaTek, Texas Instruments, Samsung Electronics and Qualcomm.

The HSA Foundation is pursuing this goal by delivering HSA-optimized programming specifications for the heterogeneous programming languages available today: OpenCL™ and C++ AMP. Going forward, I expect that the HSAF members will expand the set of developer tools to encompass many other languages and libraries across multiple software domains and segments. They have also recently released a new HSA 1.1 specification, which significantly enhances the ability to integrate open and proprietary IP blocks in heterogeneous designs.

And why isn’t NVIDIA part of the HSA Foundation? I think they want to go their own route, and they are in a very strong position. Their NVLink interconnect runs 5-12 times faster than traditional PCIe Gen3, and NVIDIA is collaborating with IBM to pair the Power8 CPU with its own upcoming Pascal GPU.

And we shouldn’t count Intel out either. The Xeon Phi “Knights Landing” chip comes with 72 Airmont (Atom) cores with four threads per core, and supports up to 384 GB of "far" DDR4 RAM and 8–16 GB of stacked "near" 3D MCDRAM. Each core also has two 512-bit vector units which support AVX-512 SIMD instructions. So this is a screamingly fast heterogeneous computing device as well.
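To get a feel for what those figures add up to, the vector width can be turned into a lane count with some simple arithmetic. The core count, units per core, and vector width come from the paragraph above; the helper names are hypothetical, and the `peak_gflops` function rests on two labeled assumptions: that each lane retires one fused multiply-add (2 floating-point operations) per cycle, and a clock frequency that you supply yourself, since none is quoted here.

```python
CORES = 72                  # from the text above
VECTOR_UNITS_PER_CORE = 2   # from the text above
VECTOR_BITS = 512           # from the text above

def simd_lanes(element_bits):
    """Total number of elements the chip can operate on per vector issue."""
    return CORES * VECTOR_UNITS_PER_CORE * (VECTOR_BITS // element_bits)

def peak_gflops(element_bits, clock_ghz, flops_per_lane_per_cycle=2):
    """Theoretical peak in GFLOPS.

    Assumptions (not from the article): one fused multiply-add per lane
    per cycle, i.e. 2 flops, and a caller-supplied clock in GHz.
    """
    return simd_lanes(element_bits) * flops_per_lane_per_cycle * clock_ghz

print(simd_lanes(64))  # 1152 double-precision lanes
print(simd_lanes(32))  # 2304 single-precision lanes
```

A theoretical peak like this is an upper bound only; sustained throughput depends on memory bandwidth and on how well the code vectorizes.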

Debugging such platforms will be a challenge if different test access ports (TAPs) are required for x86, ARM, NVIDIA, and a plethora of other heterogeneous devices (maybe within one SoC!). Check out our Embedded TAPs eBook for a proposal on unifying a heterogeneous device’s different chip-level domains.

It’s a fascinating field, with some large players playing for large stakes. It will be interesting to see how this shakes out over the next few years.