Off-the-shelf memory initialization routines typically do not provide the needed diagnostic resolution if a structural failure causes the system to “blue screen”. How can test engineers overcome this?

Design engineers often must debug circuit boards that will not boot their bare-metal firmware, or that have no working BIOS or boot loader at all. Moreover, most memory controller firmware (often called Memory Reference Code, or MRC) is dedicated solely to initializing the DRAM. It provides no meaningful diagnostics when a structural fault is present on the memory nets. This is a real problem. With such a defect, the system either will not boot or maps out an entire memory channel, and either outcome rules out using functional test to identify the faulty net.

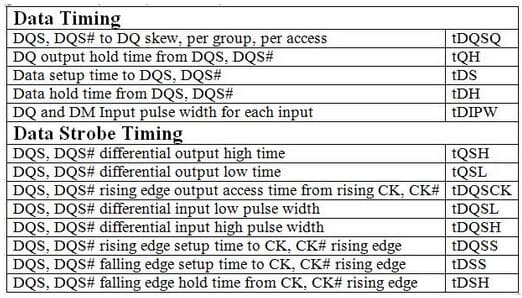

In earlier generations of DDR, it was possible to place In-Circuit Test (ICT) pads on the memory nets, which allowed structural defects to be detected. This is no longer recommended, or even possible. With today’s DDR4 memory running at up to 3,200 MT/s (on a parallel bus!), test pads disrupt signal integrity. Memory controller initialization firmware now performs some remarkable feats to minimize the overall bit error rate. For example, output driver strength and on-die termination (ODT) are set at initialization and then recalibrated periodically to compensate for voltage and temperature variations. Introducing thousands of points of discontinuity, however, is no longer practical. Even with VREFDQ, ODT, and a host of timing parameters to tune (see the table below), memory controller initialization code cannot recover system margins in the presence of such poor design practices.
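For a sense of scale, here is the unit interval (the time allotted to each bit on a DQ line) at 3,200 MT/s. DDR transfers data on both clock edges, so the corresponding clock runs at half the transfer rate:

$$
t_{\mathrm{UI}} = \frac{1}{3200 \times 10^{6}\ \mathrm{transfers/s}} = 312.5\ \mathrm{ps},
\qquad
f_{\mathrm{CK}} = \frac{3200\ \mathrm{MT/s}}{2} = 1600\ \mathrm{MHz}
$$

Any reflection from a test-pad stub has to settle within a small fraction of that 312.5 ps window. That is one way to see why pads that were tolerable at earlier DDR speeds now cost margin.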

A practical alternative might be boundary-scan test (BST), also known as JTAG. BST is a structural test technology that can easily detect shorts and opens. On some designs, however, it cannot be used. The memory controller silicon might not support boundary scan, its BSDL file may be incorrect, or the chip may not be in its socket at the structural test step in manufacturing.
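The following sketch shows how boundary scan finds shorts and opens using one common approach, a counting-sequence interconnect test. The method works in three steps:

1. Each net driven by a boundary-scan cell is assigned a unique binary code, avoiding all-zeros and all-ones.
2. The codes are applied bit by bit as parallel vectors.
3. Any net that captures a code other than its own is flagged.

The net count, the wired-AND short model, and the float-high open model below are hypothetical simplifications. Real tools derive the nets from the BSDL and netlist and typically use richer sequences.

```c
#include <assert.h>

#define NUM_NETS 6
/* 3 vectors give 8 codes; assigning codes 1..6 avoids 000 and 111. */
#define NUM_VECTORS 3

/* Hypothetical fault model: at most one wired-AND short between two nets and
   at most one open whose receiver floats high. Use -1 for "no fault". */
typedef struct {
    int short_a;
    int short_b;
    int open_net;
} fault_model;

/* Code driven on net n: n + 1, so no net is all-zeros or all-ones. */
static unsigned net_code(int n) { return (unsigned)(n + 1); }

/* Drive bit v of every net's code in parallel, then apply the fault model
   to obtain the values the receiving boundary-scan cells capture. */
static void apply_vector(const fault_model *f, unsigned v, unsigned rx[NUM_NETS]) {
    for (int n = 0; n < NUM_NETS; n++)
        rx[n] = (net_code(n) >> v) & 1u;
    if (f->short_a >= 0) {
        assert(f->short_b >= 0 && f->short_b != f->short_a);
        unsigned wired = rx[f->short_a] & rx[f->short_b];  /* wired-AND short */
        rx[f->short_a] = wired;
        rx[f->short_b] = wired;
    }
    if (f->open_net >= 0)
        rx[f->open_net] = 1u;  /* open input floats high */
}

/* Returns a bitmask of nets whose captured code differs from the driven code. */
static unsigned interconnect_test(const fault_model *f) {
    unsigned received[NUM_NETS] = {0};
    for (unsigned v = 0; v < NUM_VECTORS; v++) {
        unsigned rx[NUM_NETS];
        apply_vector(f, v, rx);
        for (int n = 0; n < NUM_NETS; n++)
            received[n] |= rx[n] << v;
    }
    unsigned failing = 0;
    for (int n = 0; n < NUM_NETS; n++)
        if (received[n] != net_code(n))
            failing |= 1u << n;
    return failing;
}
```

Some example outcomes show both the strength and the limits of this scheme:

- **Short between nets 0 and 1** (codes 001 and 010): both nets capture 000, so both are flagged.
- **Short between nets 0 and 2** (codes 001 and 011): both capture 001, which matches net 0's own code, so only net 2 is flagged.
- **Open on any net:** the net reads 111, a code no net was assigned, so it is always caught.

The second case is an example of aliasing. A true/complement counting sequence is one way to reduce it.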

In theory, the BIOS or memory initialization firmware could detect defects and report them down to the bit level. In practice, this does not happen. The MRC is written to bring up the memory as quickly as possible while maximizing system margins. If a data bit fault is present on the bus, the MRC might disable the rank associated with the failure, perhaps reporting only the failing rank as a fault indicator. Or it might halt the boot process entirely and provide little or no diagnostic information. At best, since memory training is done on a per-strobe (DQS) basis, diagnostics might identify a failing DRAM chip (on the assumption that each chip is accessed by its own strobe). Using pattern-sensitive test algorithms to isolate the fault is beyond the scope of the MRC.
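A small sketch makes this loss of resolution concrete. On DDR4, one strobe covers four DQ bits on x4 devices and eight on x8 or x16 devices (x16 parts carry two strobes, one per byte). A report at strobe granularity therefore cannot tell which bit within the group failed. The function names and the non-ECC 64-bit data bus are assumptions for illustration, not part of any MRC.

```c
#include <assert.h>
#include <stdint.h>

/* DQ bits covered by one strobe (DQS pair) for a given DDR4 device width:
   x4 devices have one strobe per nibble; x8 and x16 have one per byte. */
static unsigned bits_per_strobe(unsigned device_width) {
    assert(device_width == 4 || device_width == 8 || device_width == 16);
    return device_width == 4 ? 4u : 8u;
}

/* Collapse a mask of failing DQ bits (non-ECC 64-bit bus assumed) into a mask
   of failing strobe groups: the resolution a strobe-based report provides. */
static uint32_t failing_strobes(uint64_t failing_bits, unsigned device_width) {
    unsigned group = bits_per_strobe(device_width);
    uint32_t strobes = 0;
    for (unsigned bit = 0; bit < 64; bit++)
        if ((failing_bits >> bit) & 1u)
            strobes |= UINT32_C(1) << (bit / group);
    return strobes;
}

/* Index of the DRAM device that owns a given DQ bit on the same assumed bus. */
static unsigned device_for_bit(unsigned bit, unsigned device_width) {
    assert(device_width == 4 || device_width == 8 || device_width == 16);
    return bit / device_width;
}
```

For example, on an x8 design, failures on DQ8 and DQ13 both collapse into strobe group 1. The report then points at a chip (or, on x16 devices, a byte lane) rather than at the failing net.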

The remaining option is conventional functional test, which runs on top of the boot loader or operating system. Algorithms such as “walking ones, walking zeroes” and full cell tests can easily isolate issues to the bit level. But, as described above, these tests require the memory to be initialized already. If a rank is bad, the memory test cannot run on it, because that rank is inaccessible. So, although these tests provide the needed granularity, they are of no help if the system has “blue screened” or mapped out the afflicted memory channel.
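The walking-ones, walking-zeroes idea can be sketched in C. Each pattern drives exactly one bit to a value different from all the others, so a stuck data line shows up as a specific bit in the read-back difference.

So that it runs anywhere, the sketch uses a simulated memory location with hypothetical stuck-at faults (`sim_location`, `bus_write`, and `bus_read` are illustrative names). On hardware, the reads and writes would go through a volatile pointer into already-initialized memory. That is exactly the precondition that fails once a rank has been mapped out.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of one 64-bit location reached through a faulty data bus:
   bits in stuck_high always read 1, bits in stuck_low always read 0. */
typedef struct {
    uint64_t cell;
    uint64_t stuck_high;
    uint64_t stuck_low;
} sim_location;

static void bus_write(sim_location *loc, uint64_t value) { loc->cell = value; }

static uint64_t bus_read(const sim_location *loc) {
    return (loc->cell | loc->stuck_high) & ~loc->stuck_low;
}

/* Walking ones, then walking zeroes, across all 64 data bits. Any bit that
   reads back differently from what was written is recorded in the result. */
static uint64_t walking_data_bus_test(sim_location *loc) {
    uint64_t failing = 0;
    for (unsigned bit = 0; bit < 64; bit++) {
        uint64_t one_hot = UINT64_C(1) << bit;
        uint64_t patterns[2] = { one_hot, ~one_hot };  /* walking 1, walking 0 */
        for (int p = 0; p < 2; p++) {
            bus_write(loc, patterns[p]);
            failing |= bus_read(loc) ^ patterns[p];
        }
    }
    return failing;
}
```

The walking-ones pass exposes lines stuck high, because every pattern except one drives that line low. The walking-zeroes pass exposes lines stuck low in the same way. A complete memory test would also cover address lines and use full cell tests across every rank. This sketch covers only the data-bit isolation step.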

How is this dilemma overcome? The solution revolves around executing a conventional functional RAMBusTest, but below the boot loader or operating system. More details on the technology implementation will be in Part 2 of this blog. In the meantime, for a generic treatment of memory testing, please see our eBook, Testing High-Speed Memory with Embedded Instruments.
